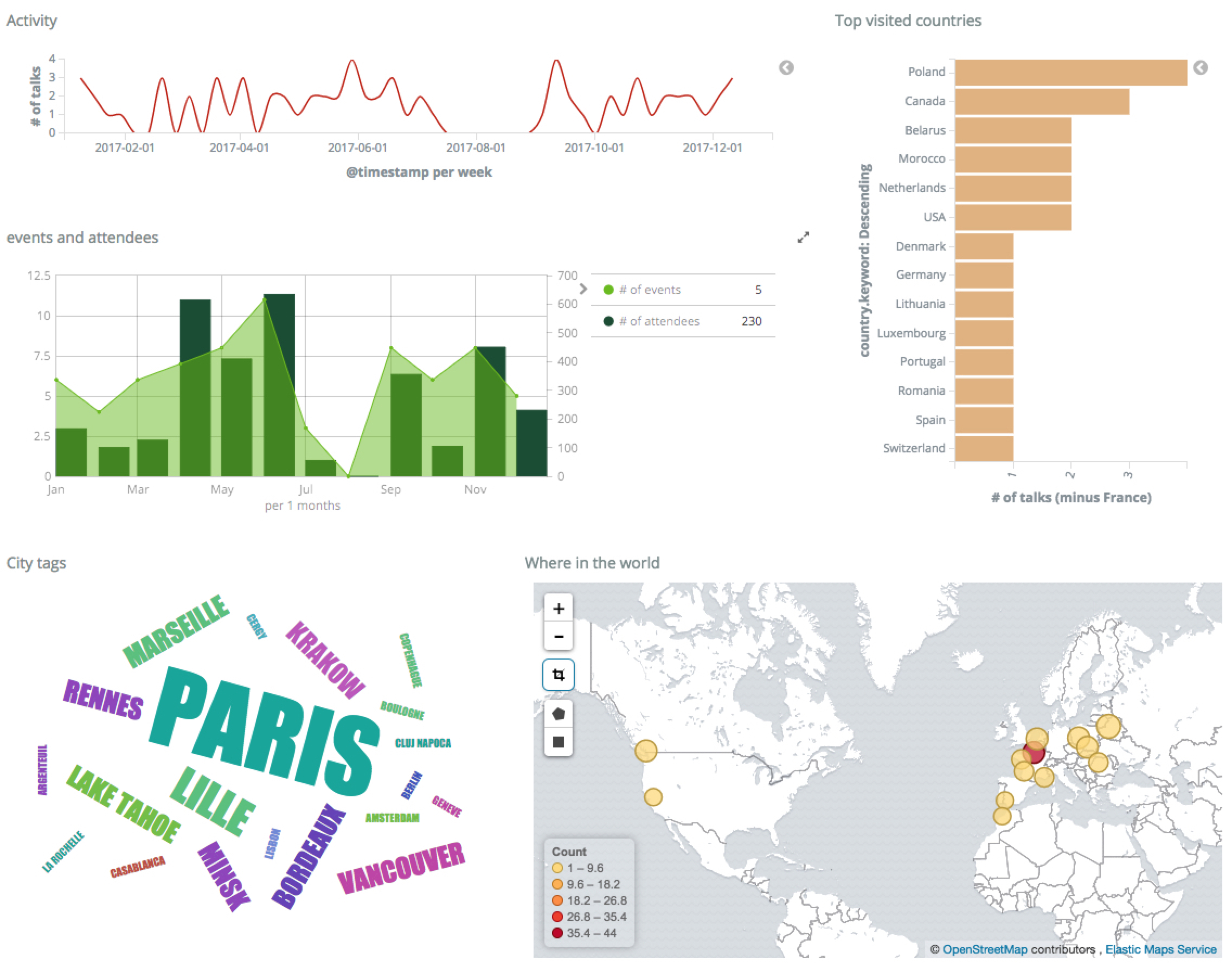

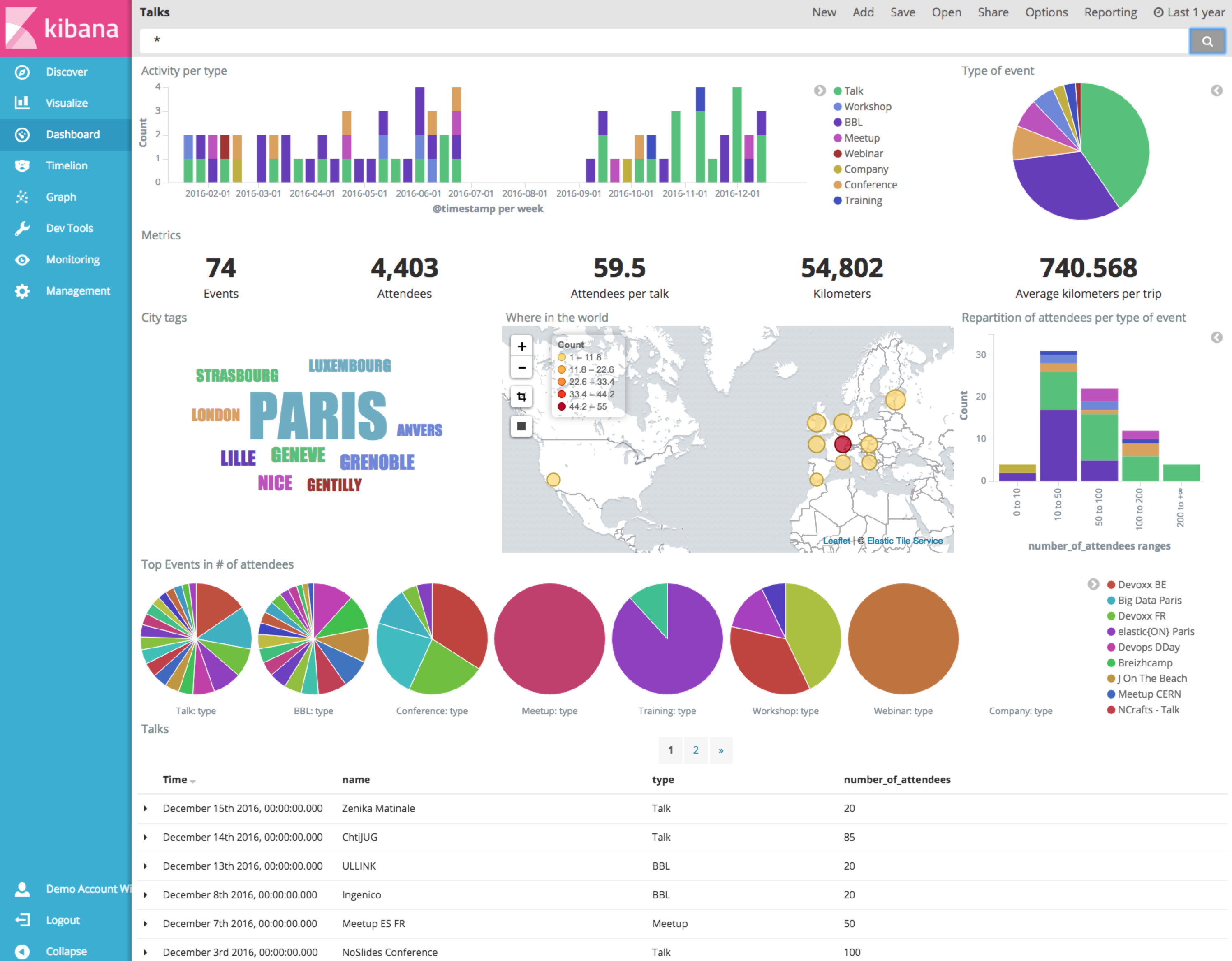

David discovered the Elasticsearch project in 2011. After contributing to the project and creating open source plugins for it, he joined Elastic, the company, in 2013, where he is a Developer and Evangelist. He also created, and still actively manages, the French-speaking User Group. At Elastic, he has mainly worked on the Elasticsearch source code, specifically on open source plugins. In his free time, he likes talking about Elasticsearch at conferences or in companies (Brown Bag Lunches, AKA BBLs). He is also the author of the FSCrawler project, which helps you index your PDF, OpenOffice, and other documents in Elasticsearch using Apache Tika behind the scenes.

Elasticsearch

35 articles

Ai

Ant

Bbl

Beats

Brownbaglunch

Career

Cloud

Community

Conference

Couchdb

Culture

Cursor

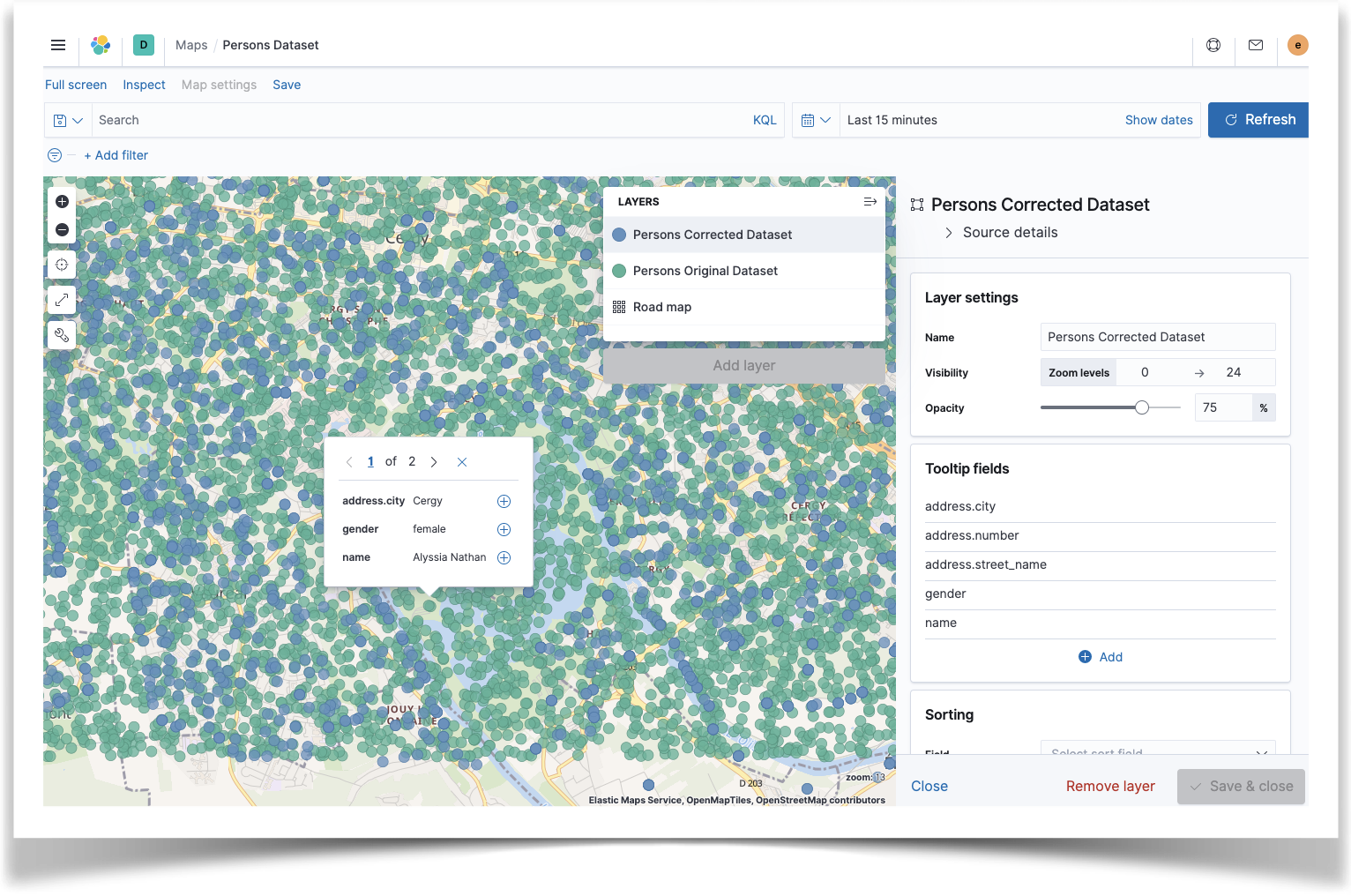

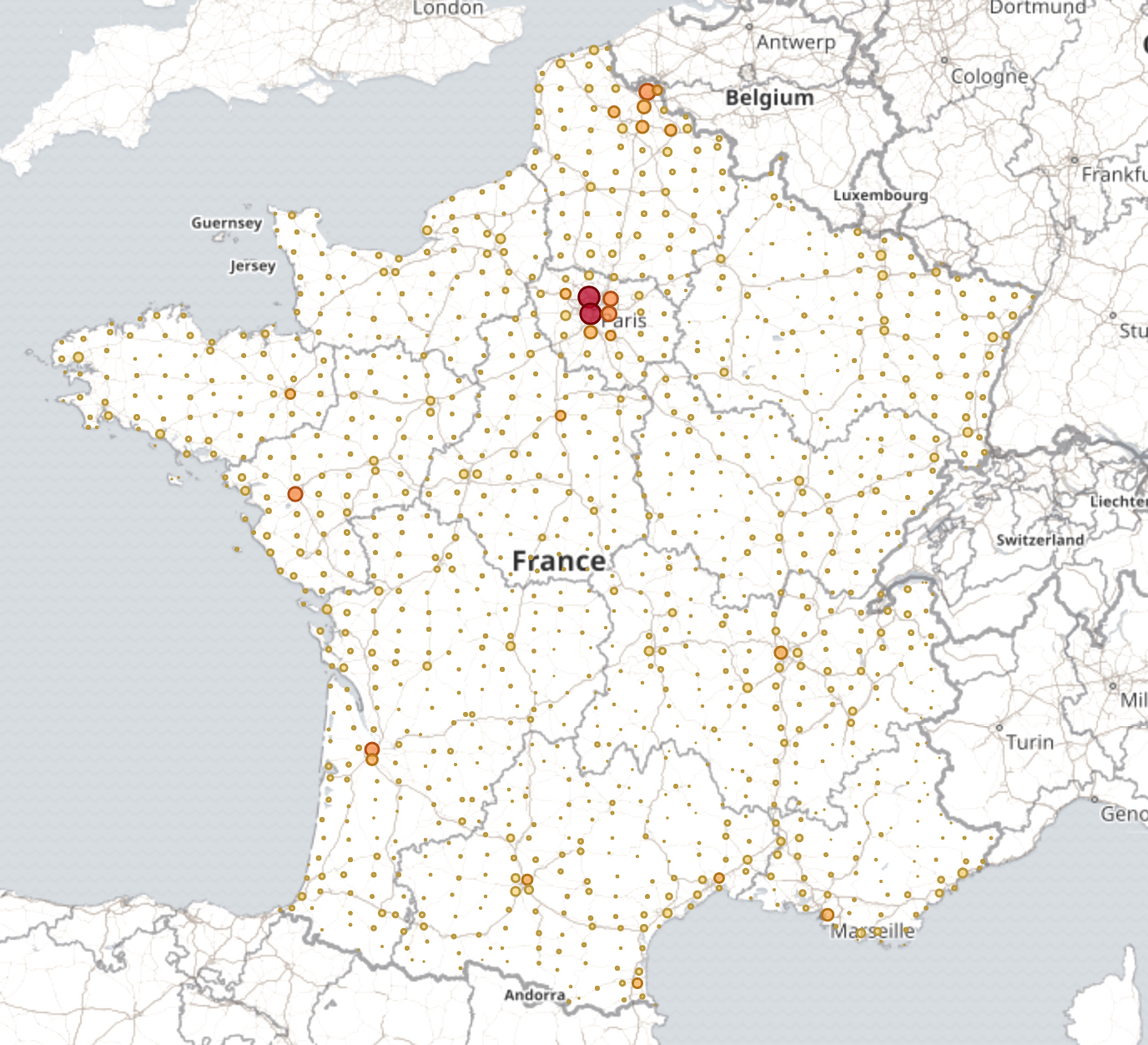

Dataset

Documentation

Elastic-Cloud

Elasticfr

Elasticsearch

Enterprise Search

Found

Fusionforge

Gforge

Github

Go

Google

Hibernate

Hugo

Java

Jetty

Js

Kibana

Logstash

Mahout

Maven

Meetup

Mysql

Plugin

Recommendation

Reindex

Spring

Sql

Test

Travels

Twitter

Details

Who am I?

Developer | Evangelist at Elastic and creator of the Elastic French User Group. Frequent speaker about all things Elastic, at conferences, for User Groups, and in companies with BBL talks. In my free time, I enjoy coding and deejaying as DJ Elky, just for fun. Living with my children in Cergy, France.

Social Links